Jane Noyes, Andrew Booth, Margaret Cargo, Kate Flemming, Angela Harden, Janet Harris, Ruth Garside, Karin Hannes, Tomás Pantoja, James Thomas

Key Points:

- A qualitative evidence synthesis (commonly referred to as QES) can add value by providing decision makers with additional evidence to improve understanding of intervention complexity, contextual variations, implementation, and stakeholder preferences and experiences.

- A qualitative evidence synthesis can be undertaken and integrated with a corresponding intervention review, or undertaken using a mixed-method design that integrates a qualitative evidence synthesis with an intervention review in a single protocol.

- Methods for qualitative evidence synthesis are complex and continue to develop. Authors should always consult current methods guidance at methods.cochrane.org/qi.

This chapter should be cited as: Noyes J, Booth A, Cargo M, Flemming K, Harden A, Harris J, Garside R, Hannes K, Pantoja T, Thomas J. Chapter 21: Qualitative evidence. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA (editors). Cochrane Handbook for Systematic Reviews of Interventions version 6.4 (updated August 2023). Cochrane, 2023. Available from www.training.cochrane.org/handbook.

21.1 Introduction

The potential contribution of qualitative evidence to decision making is well-established (Glenton et al 2016, Booth 2017, Carroll 2017). A synthesis of qualitative evidence can inform understanding of how interventions work by:

- increasing understanding of a phenomenon of interest (e.g. women’s conceptualization of what good antenatal care looks like);

- identifying associations between the broader environment within which people live and the interventions that are implemented;

- increasing understanding of the values and attitudes toward, and experiences of, health conditions and interventions by those who implement or receive them; and

- providing a detailed understanding of the complexity of interventions and implementation, and their impacts and effects on different subgroups of people and the influence of individual and contextual characteristics within different contexts.

The aim of this chapter is to provide authors (who already have experience of undertaking qualitative research and qualitative evidence synthesis) with additional guidance on undertaking a qualitative evidence synthesis that is subsequently integrated with an intervention review. This chapter draws upon guidance presented in a series of six papers published in the Journal of Clinical Epidemiology (Cargo et al 2018, Flemming et al 2018, Harden et al 2018, Harris et al 2018, Noyes et al 2018a, Noyes et al 2018b) and from a further World Health Organization series of papers published in BMJ Global Health, which extend guidance to qualitative evidence syntheses conducted within a complex intervention and health systems and decision making context (Booth et al 2019a, Booth et al 2019b, Flemming et al 2019, Noyes et al 2019, Petticrew et al 2019). The qualitative evidence synthesis and integration methods described in this chapter supplement Chapter 17 on methods for addressing intervention complexity. Authors undertaking qualitative evidence syntheses should consult these papers and chapters for more detailed guidance.

21.2 Designs for synthesizing and integrating qualitative evidence with intervention reviews

There are two main designs for synthesizing qualitative evidence with evidence of the effects of interventions:

- Sequential reviews: where one or more existing intervention review(s) has been published on a similar topic, it is possible to do a sequential qualitative evidence synthesis and then integrate its findings with those of the intervention review to create a mixed-method review. For example, Lewin and colleagues (Lewin et al 2010) and Glenton and colleagues (Glenton et al 2013) undertook sequential reviews of lay health worker programmes using separate protocols and then integrated the findings.

- Convergent mixed-methods review: where no pre-existing intervention review exists, it is possible to do a full convergent ‘mixed-methods’ review where the trials and qualitative evidence are synthesized separately, creating opportunities for them to ‘speak’ to each other during development, and then integrated within a third synthesis. For example, Hurley and colleagues (Hurley et al 2018) undertook an intervention review and a qualitative evidence synthesis following a single protocol.

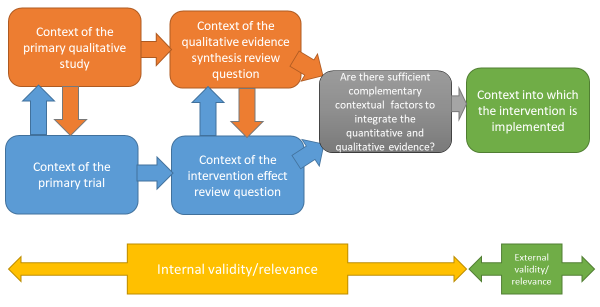

It is increasingly common for sequential and convergent reviews to be conducted by some or all of the same authors; if not, it is critical that authors working on the qualitative evidence synthesis and intervention review work closely together to identify and create sufficient points of integration to enable a third synthesis that integrates the two reviews, or the conduct of a mixed-method review (Noyes et al 2018a) (see Figure 21.2.a). This consideration also applies where an intervention review has already been published and there is no prior relationship with the qualitative evidence synthesis authors. We recommend that at least one joint author works across both reviews to facilitate development of the qualitative evidence synthesis protocol, conduct of the synthesis, and subsequent integration of the qualitative evidence synthesis with the intervention review within a mixed-methods review.

Figure 21.2.a Considering context and points of contextual integration with the intervention review or within a mixed-method review

21.3 Defining qualitative evidence and studies

We use the term ‘qualitative evidence synthesis’ to acknowledge that other types of qualitative evidence (or data) can potentially enrich a synthesis, such as narrative data derived from qualitative components of mixed-method studies or free text from questionnaire surveys. We would not, however, consider a questionnaire survey to be a qualitative study and qualitative data from questionnaires should not usually be privileged over relevant evidence from qualitative studies. When thinking about qualitative evidence, specific terminology is used to describe the level of conceptual and contextual detail. Qualitative evidence that includes higher or lower levels of conceptual detail is described as ‘rich’ or ‘poor’. Associated terms ‘thick’ or ‘thin’ are best used to refer to higher or lower levels of contextual detail. Review authors can potentially develop a stronger synthesis using rich and thick qualitative evidence but, in reality, they will identify diverse conceptually rich and poor and contextually thick and thin studies. Developing a clear picture of the type and conceptual richness of available qualitative evidence strongly influences the choice of methodology and subsequent methods. We recommend that authors undertake scoping searches to determine the type and richness of available qualitative evidence before selecting their methodology and methods.

A qualitative study is a research study that uses a qualitative method of data collection and analysis. Review authors should include the studies that enable them to answer their review question. When selecting qualitative studies in a review about intervention effects, two types of qualitative study are available: those that collect data from the same participants as the included trials, known as ‘trial siblings’; and those that address relevant issues about the intervention, but as separate items of research – not connected to any included trials. Both can provide useful information, with trial sibling studies obviously closer in terms of their precise contexts to the included trials (Moore et al 2015), and non-sibling studies possibly contributing perspectives not present in the trials (Noyes et al 2016b).

21.4 Planning a qualitative evidence synthesis linked to an intervention review

The Cochrane Qualitative and Implementation Methods Group (QIMG) website provides links to practical guidance and key steps for authors who are considering a qualitative evidence synthesis (methods.cochrane.org/qi). The RETREAT framework outlines seven key considerations that review authors should systematically work through when planning a review (Booth et al 2016, Booth et al 2018) (Box 21.4.a). Flemming and colleagues (Flemming et al 2019) further explain how to factor in such considerations when undertaking a qualitative evidence synthesis within a complex intervention and decision making context when complexity is an important consideration.

Box 21.4.a RETREAT considerations when selecting an appropriate method for qualitative synthesis

Review question – first, consider the complexity of the review question. Which elements contribute most to complexity (e.g. the condition, the intervention or the context)? Which elements should be prioritized as the focal point for attention? (Squires et al 2013, Kelly et al 2017).

Epistemology – consider the philosophical foundations of the primary studies. Would it be appropriate to favour a method such as thematic synthesis that is less reliant on epistemological considerations? (Barnett-Page and Thomas 2009).

Time frame – consider what type of qualitative evidence synthesis will be feasible and manageable within the time frame available (Booth et al 2016).

Resources – consider whether the ambition of the review matches the available resources. Will the extent of the scope and the sampling approach of the review need to be limited? (Benoot et al 2016, Booth et al 2016).

Expertise – consider access to expertise, both within the review team and among a wider group of advisors. Does the available expertise match the qualitative evidence synthesis approach chosen? (Booth et al 2016).

Audience and purpose – consider the intended audience and purpose of the review. Does the approach to question formulation, the scope of the review and the intended outputs meet their needs? (Booth et al 2016).

Type of data – consider the type of data present in typical studies for inclusion. To what extent are candidate studies conceptually rich and contextually thick in their detail?

21.5 Question development

The review question is critical to development of the qualitative evidence synthesis (Harris et al 2018). Question development affords a key point for integration with the intervention review. Complementary guidance supports novel thinking about question development, application of question development frameworks and the types of questions to be addressed by a synthesis of qualitative evidence (Cargo et al 2018, Harris et al 2018, Noyes et al 2018a, Booth et al 2019b, Flemming et al 2019).

Research questions for quantitative reviews are often mapped using structures such as PICO. Some qualitative reviews adopt this structure, or use an adapted variation of such a structure, e.g. SPICE (Setting, Perspective, Intervention or Phenomenon of Interest, Comparison, Evaluation) or SPIDER (Sample, Phenomenon of Interest, Design, Evaluation, Research type) (Cooke et al 2012). Booth and colleagues (Booth et al 2019b) propose an extended question framework (PerSPecTIF) to describe both wider context and immediate setting that is particularly suited to qualitative evidence synthesis and complex intervention reviews (see Table 21.5.a).

Detailed attention to the question and specification of context at an early stage is critical to many aspects of qualitative synthesis (see Petticrew et al (2019) and Booth et al (2019a) for a more detailed discussion). Specifying the context enables a review team to identify opportunities for integration with the intervention review, or opportunities for maximizing use and interpretation of evidence as a mixed-method review progresses (see Figure 21.2.a). It also informs both the interpretation of the observed effects and assessment of the strength of the evidence available in addressing the review question (Noyes et al 2019). Subsequent application of GRADE CERQual (Lewin et al 2015, Lewin et al 2018), an approach to assess the confidence in synthesized qualitative findings, requires further specification of context in the review question.

Table 21.5.a PerSPecTIF question formulation framework for qualitative evidence syntheses (Booth et al 2019b). Reproduced with permission of BMJ Publishing Group

| Per | S | P | E | (C) | Ti | F |
|---|---|---|---|---|---|---|
| Perspective | Setting | Phenomenon of interest/Problem | Environment | Comparison (optional) | Time/Timing | Findings |
| From the perspective of a pregnant woman | In the setting of rural communities | How does facility-based care | Within an environment of poor transport infrastructure and distantly located facilities | Compare with traditional birth attendants at home | Up to and including delivery | In relation to the woman’s perceptions and experiences? |
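As an illustration only, the PerSPecTIF elements in Table 21.5.a could be recorded as a structured object so that a review team can check that each element has been specified before the protocol is finalized. The sketch below is not part of the PerSPecTIF guidance; the class name, field names and helper methods are hypothetical, and the example values are taken from the worked example in the table.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PerSPecTIFQuestion:
    """Hypothetical container for the PerSPecTIF elements of a review question."""
    perspective: str
    setting: str
    phenomenon: str
    environment: str
    timing: str
    findings: str
    comparison: Optional[str] = None  # the (C) element is optional

    def missing_elements(self) -> List[str]:
        """Return the names of required elements that were left blank."""
        required = {
            "perspective": self.perspective,
            "setting": self.setting,
            "phenomenon": self.phenomenon,
            "environment": self.environment,
            "timing": self.timing,
            "findings": self.findings,
        }
        return [name for name, value in required.items() if not value.strip()]

    def as_text(self) -> str:
        """Join the elements in table order: Per, S, P, E, (C), Ti, F."""
        parts = [self.perspective, self.setting, self.phenomenon, self.environment]
        if self.comparison:
            parts.append(self.comparison)
        parts += [self.timing, self.findings]
        return ", ".join(part.strip() for part in parts)


# Worked example from Table 21.5.a
question = PerSPecTIFQuestion(
    perspective="From the perspective of a pregnant woman",
    setting="In the setting of rural communities",
    phenomenon="How does facility-based care",
    environment="Within an environment of poor transport infrastructure "
                "and distantly located facilities",
    comparison="Compare with traditional birth attendants at home",
    timing="Up to and including delivery",
    findings="In relation to the woman's perceptions and experiences?",
)
print(question.missing_elements())
print(question.as_text())
```

Keeping the comparison element optional mirrors the table, where it is shown in parentheses; a blank required element is flagged rather than silently accepted.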

21.6 Questions exploring intervention implementation

Additional guidance is available on formulation of questions to understand and assess intervention implementation (Cargo et al 2018). A strong understanding of how an intervention is thought to work, and how it should be implemented in practice, will enable a critical consideration of whether any observed lack of effect might be due to a poorly conceptualized intervention (i.e. theory failure) or a poor intervention implementation (i.e. implementation failure). Heterogeneity needs to be considered for both the underlying theory and the ways in which the intervention was implemented. An a priori scoping review (Levac et al 2010), concept analysis (Walker and Avant 2005), critical review (Grant and Booth 2009) or textual narrative synthesis (Barnett-Page and Thomas 2009) can be undertaken to classify interventions and/or to identify the programme theory, logic model or implementation measures and processes. The intervention Complexity Assessment Tool for Systematic Reviews (iCAT_SR) (Lewin et al 2017) may be helpful in classifying complexity in interventions and developing associated questions.

An existing intervention model or framework may be used within a new topic or context. The ‘best-fit framework’ approach to synthesis (Carroll et al 2013) can be used to establish the degree to which the source context (from where the framework was derived) resembles the new target context (see Figure 21.2.a). In the absence of an explicit programme theory and detail of how implementation relates to outcomes, an a priori realist review, meta-ethnography or meta-interpretive review can be undertaken (Booth et al 2016). For example, Downe and colleagues (Downe et al 2016) undertook an initial meta-ethnography review to develop an understanding of the outcomes of importance to women receiving antenatal care.

However, these additional activities are very resource-intensive and are only recommended when the review team has sufficient resources to supplement the planned qualitative evidence syntheses with an additional explanatory review. Where resources are less plentiful a review team could engage with key stakeholders to articulate and develop programme theory (Kelly et al 2017, De Buck et al 2018).

21.6.1 Using logic models and theories to support question development

Review authors can develop a more comprehensive representation of question features through use of logic models, programme theories, theories of change, templates and pathways (Anderson et al 2011, Kneale et al 2015, Noyes et al 2016a) (see also Chapter 17, Section 17.2.1 and Chapter 2, Section 2.5.1). These different forms of social theory can be used to visualize and map the research question, its context, components, influential factors and possible outcomes (Noyes et al 2016a, Rehfuess et al 2018).

21.6.2 Stakeholder engagement

Finally, review authors need to engage stakeholders, including consumers affected by the health issue and interventions, or likely users of the review from clinical or policy contexts. From the preparatory stage, this consultation can ensure that the review scope and question are appropriate and that the resulting products address the implementation concerns of decision makers (Kelly et al 2017, Harris et al 2018).

21.7 Searching for qualitative evidence

In comparison with identification of quantitative studies (see also Chapter 4), procedures for retrieval of qualitative research remain relatively under-developed. Particular challenges in retrieval are associated with non-informative titles and abstracts, diffuse terminology, poor indexing and the overwhelming prevalence of quantitative studies within data sources (Booth et al 2016).

Principal considerations when planning a search for qualitative studies, and the evidence that underpins them, have been characterized using a 7S framework from Sampling and Sources through Structured questions, Search procedures, Strategies and filters and Supplementary strategies to Standards for Reporting (Booth et al 2016).

A key decision, aligned to the purpose of the qualitative evidence synthesis, is whether to use the comprehensive, exhaustive approaches that characterize quantitative searches or whether to use purposive sampling that is more sensitive to the qualitative paradigm (Suri 2011). The latter, which is used when the intent is to generate an interpretative understanding (for example, when generating theory), draws upon a versatile toolkit that includes theoretical sampling, maximum variation sampling and intensity sampling. Sources of qualitative evidence are more likely to include book chapters, theses and grey literature reports than standard quantitative study reports, and so a search strategy should place extra emphasis on these sources. Local databases may be particularly valuable given the criticality of context (Stansfield et al 2012).

Another key decision is whether to use study filters or simply to conduct a topic-based search where qualitative studies are identified at the study selection stage. Search filters for qualitative studies lack the specificity of their quantitative counterparts. Nevertheless, filters may facilitate efficient retrieval by study type (e.g. qualitative (Rogers et al 2018) or mixed methods (El Sherif et al 2016)) or by perspective (e.g. patient preferences (Selva et al 2017)), particularly where the quantitative literature is overwhelmingly large and thus increases the number needed to retrieve. Poor indexing of qualitative studies makes citation searching (forward and backward) and the Related Articles features of electronic databases particularly useful (Cooper et al 2017). Further guidance on searching for qualitative evidence is available (Booth et al 2016, Noyes et al 2018a). The CLUSTER method has been proposed as a specific named method for tracking down associated or sibling reports (Booth et al 2013). The BeHEMoTh approach has been developed for identifying explicit use of theory (Booth and Carroll 2015).

21.7.1 Searching for process evaluations and implementation evidence

Four potential approaches are available to identify process evaluations.

- Identify studies at the point of study selection rather than through tailored search strategies. This involves conducting a sensitive topic search without any study design filter (Harden et al 1999), and identifying all study designs of interest during the screening process. This approach can be feasible when a review question involves multiple publication types (e.g. randomized trial, qualitative research and economic evaluations), which then do not require separate searches.

- Restrict included process evaluations to those conducted within randomized trials, which can be identified using standard search filters (see Chapter 4, Section 4.4.7). This method relies on reports of process evaluations also describing the surrounding randomized trial in enough detail to be identified by the search filter.

- Use unevaluated filter terms (such as ‘process evaluation’, ‘program(me) evaluation’, ‘feasibility study’, ‘implementation’ or ‘proof of concept’ etc) to retrieve process evaluations or implementation data. Approaches using strings of terms associated with the study type or purpose are considered experimental. There is a need to develop and test such filters. It is likely that such filters may be derived from the study type (process evaluation), the data type (process data) or the application (implementation) (Robbins et al 2011).

- Minimize reliance on topic-based searching and rely on citations-based approaches to identify linked reports, published or unpublished, of a particular study (Booth et al 2013) which may provide implementation or process data (Bonell et al 2013).

More detailed guidance is provided by Cargo and colleagues (Cargo et al 2018).
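To make the third approach concrete, the sketch below shows one way a topic search could be combined with the unevaluated filter terms listed above. It is illustrative only: the function names and the topic terms are hypothetical, the output uses generic Boolean syntax rather than the syntax of any particular database, and the filter terms remain untested, as noted above.

```python
from typing import List, Optional

# Unevaluated filter terms named in this section; 'program(me)' is expanded
# into both spellings.
PROCESS_TERMS = [
    "process evaluation",
    "program evaluation",
    "programme evaluation",
    "feasibility study",
    "implementation",
    "proof of concept",
]


def or_group(terms: List[str]) -> str:
    """Quote multi-word terms and join all terms with OR inside parentheses."""
    if not terms:
        raise ValueError("at least one term is required")
    quoted = [f'"{term}"' if " " in term else term for term in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_search(topic_terms: List[str],
                 filter_terms: Optional[List[str]] = None) -> str:
    """Build a topic-only search, or a topic search narrowed by filter terms."""
    topic = or_group(topic_terms)
    if not filter_terms:
        return topic  # first approach: sensitive topic search, no filter
    return f"{topic} AND {or_group(filter_terms)}"


# Hypothetical topic terms, for illustration only
topic = ["lay health worker", "community health worker"]
print(build_search(topic))
print(build_search(topic, PROCESS_TERMS))
```

Calling `build_search` without filter terms corresponds to the first approach (identifying study designs at the selection stage), so the same helper can be used to compare the yield of filtered and unfiltered searches.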

21.8 Assessing methodological strengths and limitations of qualitative studies

Assessment of the methodological strengths and limitations of qualitative research remains contested within the primary qualitative research community (Garside 2014). However, within systematic reviews and evidence syntheses it is considered essential, even when studies are not to be excluded on the basis of quality (Carroll et al 2013). One review found almost 100 appraisal tools for assessing primary qualitative studies (Munthe-Kaas et al 2019). Limitations included a focus on reporting rather than conduct and the presence of items that are separate from, or tangential to, consideration of study quality (e.g. ethical approval).

Authors should distinguish between assessment of study quality and assessment of risk of bias by focusing on assessment of methodological strengths and limitations as a marker of study rigour (what we term a ‘risk to rigour’ approach (Noyes et al 2019)). In the absence of a definitive risk to rigour tool, we recommend that review authors select from published, commonly used and validated tools that focus on the assessment of the methodological strengths and limitations of qualitative studies (see Box 21.8.a). Pragmatically, we consider a ‘validated’ tool as one that has been subjected to evaluation. Issues such as inter-rater reliability are afforded less importance given that identification of complementary or conflicting perspectives on risk to rigour is considered more useful than achievement of consensus per se (Noyes et al 2019).

The CASP tool for qualitative research (as one example) maps onto the domains in Box 21.8.a (CASP 2013). Tools not meeting the criterion of focusing on assessment of methodological strengths and limitations include those that integrate assessment of the quality of reporting (such as scoring of the title and abstract, etc) into an overall assessment of methodological strengths and limitations. As with other risk of bias assessment tools, we strongly recommend against the application of scores to domains or calculation of total quality scores. We encourage review authors to discuss the studies and their assessments of ‘risk to rigour’ for each paper and how the study’s methodological limitations may affect review findings (Noyes et al 2019). We further advise that qualitative ‘sensitivity analysis’, exploring the robustness of the synthesis and its vulnerability to methodologically limited studies, be routinely applied regardless of the review authors’ overall confidence in synthesized findings (Carroll et al 2013). Evidence suggests that qualitative sensitivity analysis is equally advisable for mixed methods studies from which the qualitative component is extracted (Verhage and Boels 2017).

Box 21.8.a Example domains that provide an assessment of methodological strengths and limitations to determine study rigour

Clear aims and research question

Congruence between the research aims/question and research design/method(s)

Rigour of case and/or participant identification, sampling and data collection to address the question

Appropriate application of the method

Richness/conceptual depth of findings

Exploration of deviant cases and alternative explanations

Reflexivity of the researchers*

*Reflexivity encourages qualitative researchers and reviewers to consider the actual and potential impacts of the researcher on the context, research participants and the interpretation and reporting of data and findings (Newton et al 2012). Being reflexive entails making conflicts of interest transparent, discussing the impact of the reviewers and their decisions on the review process and findings and making transparent any issues discussed and subsequent decisions.

Adapted from Noyes et al (2019) and Alvesson and Sköldberg (2009)

21.8.1 Additional assessment of methodological strengths and limitations of process evaluation and intervention implementation evidence

Few assessment tools explicitly address rigour in process evaluation or implementation evidence. For qualitative primary studies, the 8-item process evaluation tool developed by the EPPI-Centre (Rees et al 2009, Shepherd et al 2010) can be used to supplement tools selected to assess methodological strengths and limitations and risks to rigour in primary qualitative studies. One of these items, a question on usefulness (framed as ‘how well the intervention processes were described and whether or not the process data could illuminate why or how the interventions worked or did not work’) offers a mechanism for exploring process mechanisms (Cargo et al 2018).

21.9 Selecting studies to synthesize

Decisions about inclusion or exclusion of studies can be more complex in qualitative evidence syntheses compared to reviews of trials that aim to include all relevant studies. Decisions on whether to include all studies or to select a sample of studies depend on a range of general and review-specific criteria that Noyes and colleagues (Noyes et al 2019) outline in detail. The number of qualitative studies selected needs to be consistent with a manageable synthesis, and the contexts of the included studies should enable integration with the trials in the effectiveness analysis (see Figure 21.2.a). The guiding principle is transparency in the reporting of all decisions and their rationale.

21.10 Selecting a qualitative evidence synthesis and data extraction method

Authors will typically find that they cannot select an appropriate synthesis method until the pool of available qualitative evidence has been thoroughly scoped. Flexible options concerning choice of method may need to be articulated in the protocol.

The INTEGRATE-HTA guidance on selecting methodology and methods for qualitative evidence synthesis and health technology assessment offers a useful starting point when selecting a method of synthesis (Booth et al 2016, Booth et al 2018). Some methods are designed primarily to develop findings at a descriptive level and thus directly feed into lines of action for policy and practice. Others hold the capacity to develop new theory (e.g. meta-ethnography and theory building approaches to thematic synthesis). Noyes and colleagues (Noyes et al 2019) and Flemming and colleagues (Flemming et al 2019) elaborate on key issues for consideration when selecting a method that is particularly suited to a Cochrane Review and decision making context (see Table 21.10.a). Three qualitative evidence synthesis methods (thematic synthesis, framework synthesis and meta-ethnography) are recommended to produce syntheses that can subsequently be integrated with an intervention review or analysis.

Table 21.10.a Recommended methods for undertaking a qualitative evidence synthesis for subsequent integration with an intervention review, or as part of a mixed-method review (adapted from an original source developed by convenors (Flemming et al 2019, Noyes et al 2019))

| Methodology | Explanation |
|---|---|
| Likely to be most suitable | |
| Thematic synthesis (Thomas and Harden 2008) | Pros: Most accessible form of synthesis. Clear approach, can be used with ‘thin’ data to produce descriptive themes and with ‘thicker’ data to develop descriptive themes into more in-depth analytic themes. Themes are then integrated within the quantitative synthesis. Cons: May be limited in interpretive ‘power’ and risks over-simplistic use and thus not truly informing decision making such as guidelines. Complex synthesis process that requires an experienced team. Theoretical findings may combine empirical evidence, expert opinion and conjecture to form hypotheses. More work is needed on how GRADE CERQual, an approach to assess confidence in synthesized qualitative findings (see Section 21.12), can be applied to theoretical findings. May lack clarity on how higher-level findings translate into actionable points. |
| Requires some caution in its use | |
| Framework synthesis (Oliver et al 2008, Dixon-Woods 2011); Best-fit framework synthesis (Carroll et al 2011) | Pros: Works well within reviews of complex interventions by accommodating complexity within the framework, including representation of theory. The framework allows a clear mechanism for integration of qualitative and quantitative evidence in an aggregative way – see Noyes et al (2018a). Works well where there is broad agreement about the nature of interventions and their desired impacts. Cons: Requires identification, selection and justification of framework. A framework may be revealed as inappropriate only once extraction/synthesis is underway. Risk of simplistically forcing data into a framework for expedience. |
| Requires more caution in its use | |
| Meta-ethnography (Noblit and Hare 1988) | Pros: Primarily interpretive synthesis method leading to creation of descriptive as well as new high order constructs. Descriptive and theoretical findings can help inform decision making such as guidelines. Explicit reporting standards have been developed. Cons: Complex methodology and synthesis process that requires highly experienced team. Can take more time and resources than other methodologies. Theoretical findings may combine empirical evidence, expert opinion and conjecture to form hypotheses. May not satisfy requirements for an audit trail (although new reporting guidelines will help overcome this (France et al 2019)). More work is needed to determine how CERQual can be applied to theoretical findings. May be unclear how higher-level findings translate into actionable points. |

21.11 Data extraction

Qualitative findings may take the form of quotations from participants, subthemes and themes identified by the study’s authors, explanations, hypotheses or new theory, or observational excerpts and author interpretations of these data (Sandelowski and Barroso 2002). Findings may be presented as a narrative, or summarized and displayed as tables, infographics or logic models and potentially located in any part of the paper (Noyes et al 2019).

Methods for qualitative data extraction vary according to the synthesis method selected. Data extraction is not sequential and linear; often, it involves moving backwards and forwards between review stages. Review teams will need regular meetings to discuss and further interrogate the evidence and thereby achieve a shared understanding. It may be helpful to draw on a key stakeholder group to help in interpreting the evidence and in formulating key findings. Additional approaches (such as subgroup analysis) can be used to explore evidence from specific contexts further.

Irrespective of the review type and choice of synthesis method, we consider it best practice to extract detailed contextual and methodological information on each study and to report this information in a table of ‘Characteristics of included studies’ (see Table 21.11.a). The template for intervention description and replication (TIDieR) checklist (Hoffmann et al 2014) and the iCAT_SR tool (Lewin et al 2017) may help with specifying key information for extraction. Review authors must ensure that they preserve the context of the primary study data during the extraction and synthesis process to prevent misinterpretation of primary studies (Noyes et al 2019).

Table 21.11.a Contextual and methodological information for inclusion within a table of ‘Characteristics of included studies’. From Noyes et al (2019). Reproduced with permission of BMJ Publishing Group

| Data extraction field | Information extracted |
|---|---|
| Context and participants | Important elements of study context, relevant to addressing the review question and locating the context of the primary study; for example, the study setting, population characteristics, participants and participant characteristics, the intervention delivered (if appropriate), etc. |
| Study design and methods used | Methodological design and approach taken by the study; methods for identifying the sample recruitment; the specific data collection and analysis methods utilized; and any theoretical models used to interpret or contextualize the findings. |

Noyes and colleagues (Noyes et al 2019) provide additional guidance and examples of the various methods of data extraction. It is usual for review authors to select one method. In summary, extraction methods can be grouped as follows.

- Using a bespoke universal, standardized or adapted data extraction template: Review authors can develop their own review-specific data extraction template, or select a generic data extraction template by study type (e.g. templates developed by the National Institute for Health and Clinical Excellence (National Institute for Health Care Excellence 2012)).

- Using an a priori theory or predetermined framework to extract data: Framework synthesis, and its subvariant ‘Best Fit’ Framework approach, involve extracting data from primary studies against an a priori framework in order to better understand a phenomenon of interest (Carroll et al 2011, Carroll et al 2013). For example, Glenton and colleagues (Glenton et al 2013) extracted data against a modified SURE Framework (2011) to synthesize factors affecting the implementation of lay health worker interventions. The SURE framework enumerates possible factors that may influence the implementation of health system interventions (SURE (Supporting the Use of Research Evidence) Collaboration 2011, Glenton et al 2013). Use of the ‘PROGRESS’ (place of residence, race/ethnicity/culture/language, occupation, gender/sex, religion, education, socioeconomic status, and social capital) framework also helps to ensure that data extraction maintains an explicit equity focus (O'Neill et al 2014). A logic model can also be used as a framework for data extraction.

- Using a software program to code original studies inductively: A wide range of software products have been developed by systematic review organizations (such as EPPI-Reviewer (Thomas et al 2010)). Most software for the analysis of primary qualitative data – such as NVivo (www.qsrinternational.com/nvivo/home) and others – can be used to code studies in a systematic review (Houghton et al 2017). For example, one method of data extraction and thematic synthesis involves coding the original studies using a software program to build inductive descriptive themes and a theoretical explanation of phenomena of interest (Thomas and Harden 2008). Thomas and Harden (2008) provide a worked example to demonstrate coding and developing a new understanding of children’s choices and motivations for eating fruit and vegetables from included primary studies.
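As an illustration of extraction against a predetermined framework, the following minimal Python sketch charts extracted findings under the PROGRESS domains listed above. The function name `chart_findings`, the tuple layout and the example extracts are hypothetical and invented for illustration only; they are not taken from any published template or review. Findings that fit no domain are retained separately, on the assumption that the review team would then analyse them inductively.

```python
# PROGRESS domains as listed in the text (O'Neill et al 2014).
PROGRESS_DOMAINS = (
    "place of residence",
    "race/ethnicity/culture/language",
    "occupation",
    "gender/sex",
    "religion",
    "education",
    "socioeconomic status",
    "social capital",
)


def chart_findings(extracts):
    """Group extracted findings under a priori framework domains.

    `extracts` is an iterable of (study_id, domain, finding) tuples.
    Findings whose domain is not part of the framework are returned
    separately so that they are not silently discarded.
    """
    charted = {domain: [] for domain in PROGRESS_DOMAINS}
    unmatched = []
    for study_id, domain, finding in extracts:
        if domain in charted:
            charted[domain].append((study_id, finding))
        else:
            unmatched.append((study_id, finding))
    return charted, unmatched


# Hypothetical extracts, invented purely for illustration.
extracts = [
    ("Study A", "education", "Written materials assumed a high reading level."),
    ("Study B", "place of residence", "Rural participants described long travel times."),
    ("Study B", "trust in providers", "Participants valued continuity of staff."),
]
charted, unmatched = chart_findings(extracts)
```

Keeping every framework domain in the output, even when empty, makes gaps in the evidence visible at a glance; the `unmatched` list holds the material that the framework did not anticipate.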

21.12 Assessing the confidence in qualitative synthesized findings

The GRADE system has long featured in assessing the certainty of quantitative findings, and application of its qualitative counterpart, GRADE-CERQual, is recommended for Cochrane qualitative evidence syntheses (Lewin et al 2015). CERQual has four components (relevance, methodological limitations, adequacy and coherence) which are used to formulate an overall assessment of confidence in the synthesized qualitative finding. Guidance on its components and reporting requirements has been published in a series in Implementation Science (Lewin et al 2018).

21.13 Methods for integrating the qualitative evidence synthesis with an intervention review

A range of methods and tools is available for data integration or mixed-method synthesis (Harden et al 2018, Noyes et al 2019). As noted at the beginning of this chapter, review authors can integrate a qualitative evidence synthesis with an existing intervention review published on a similar topic (sequential approach), or conduct a new intervention review and qualitative evidence synthesis in parallel before integration (convergent approach). Irrespective of whether the qualitative synthesis is sequential or convergent to the intervention review, we recommend that qualitative and quantitative evidence be synthesized separately using appropriate methods before integration (Harden et al 2018). The scope for integration can be more limited with a pre-existing intervention review unless review authors have access to the data underlying the intervention review report.

Harden and colleagues and Noyes and colleagues outline the following methods and tools for integration with an intervention review (Harden et al 2018, Noyes et al 2019):

- Juxtaposing findings in a matrix: Juxtaposition is driven by the findings from the qualitative evidence synthesis (e.g. intervention components related to the acceptability or feasibility of the interventions) and these findings form one side of the matrix. Findings on intervention effects (e.g. improves outcome, no difference in outcome, uncertain effects) form the other side of the matrix. Quantitative studies are grouped according to findings on intervention effects and the presence or absence of features specified by the hypotheses generated from the qualitative synthesis (Candy et al 2011). Observed patterns in the matrix are used to explain differences in the findings of the quantitative studies and to identify gaps in research (van Grootel et al 2017). (See, for example, Ames et al 2017, Munabi-Babigumira et al 2017, Hurley et al 2018.)

- Analysing programme theory: Theories articulating how interventions are expected to work are analysed. Findings from quantitative studies, testing the effects of interventions, and from qualitative and process evaluation evidence are used together to examine how the theories work in practice (Greenhalgh et al 2007). The value of different theories is assessed or new/revised theory developed. Factors that enhance or reduce intervention effectiveness are also identified.

- Using logic models or other types of conceptual framework: A logic model (Glenton et al 2013) or other type of conceptual framework, which represents the processes by which an intervention produces change, provides a common scaffold for integrating findings across different types of evidence (Booth and Carroll 2015). Frameworks can be specified a priori from the literature or through stakeholder engagement, or newly developed during the review. Findings from quantitative studies testing the effects of interventions and those from qualitative evidence are used to develop and/or further refine the model.

- Testing hypotheses derived from syntheses of qualitative evidence: Quantitative studies are grouped according to the presence or absence of the proposition specified by the hypotheses to be tested, and subgroup analysis is used to explore differential findings on the effects of interventions (Thomas et al 2004).

- Qualitative comparative analysis (QCA): Findings from a qualitative synthesis are used to identify the range of features that are important for successful interventions, and the mechanisms through which these features operate. A QCA then tests whether or not the features are associated with effective interventions (Kahwati et al 2016). The analysis unpicks multiple potential pathways to effectiveness, accommodating scenarios where the same intervention feature is associated both with effective and less effective interventions, depending on context. QCA offers potential for use in integration but, unlike the other methods and tools presented here, it does not yet have sufficient methodological guidance available. However, exemplar reviews using QCA are available (Thomas et al 2014, Harris et al 2015, Kahwati et al 2016).
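The juxtaposition of findings in a matrix, described in the first item of this list, can be sketched in a few lines of Python. This is an illustrative sketch only: the study identifiers, the feature names and the `build_matrix` helper are hypothetical, and the effect categories simply reuse the examples given above (improves outcome, no difference, uncertain). Each quantitative study is placed in a cell defined by its effect finding and by whether a feature suggested by the qualitative synthesis is present or absent.

```python
from collections import defaultdict


def build_matrix(studies, features):
    """Cross-tabulate studies by effect and by presence or absence of each feature.

    Returns {feature: {(present, effect): [study ids]}}.
    """
    matrix = {feature: defaultdict(list) for feature in features}
    for study in studies:
        for feature in features:
            present = feature in study["features"]
            matrix[feature][(present, study["effect"])].append(study["id"])
    return matrix


# Hypothetical trials and features, invented purely for illustration.
studies = [
    {"id": "Trial 1", "effect": "improves outcome", "features": {"peer delivery", "home visits"}},
    {"id": "Trial 2", "effect": "no difference", "features": {"home visits"}},
    {"id": "Trial 3", "effect": "improves outcome", "features": {"peer delivery"}},
    {"id": "Trial 4", "effect": "uncertain", "features": set()},
]
features = ["peer delivery", "home visits"]
matrix = build_matrix(studies, features)

# Print one line per non-empty cell of the matrix.
for feature in features:
    for (present, effect), ids in sorted(matrix[feature].items()):
        status = "present" if present else "absent"
        print(f"{feature} | {status} | {effect} | {', '.join(ids)}")
```

Cells that remain empty are as informative as populated ones, because they point to combinations of features and effects for which no study was found.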

Review authors can use the above methods in combination (e.g. patterns observed through juxtaposing findings within a matrix can be tested using subgroup analysis or QCA). Analysing programme theory, using logic models and conducting QCA require review team members with specific skills in these methods. Subgroup analysis and QCA are not suitable when limited evidence is available (Harden et al 2018, Noyes et al 2019). (See also Chapter 17 on intervention complexity.)
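To make the first step of a QCA more concrete, the sketch below builds a simple crisp-set truth table in Python. It is a hedged illustration, not a full QCA: the condition names, the coded cases and the `truth_table` function are hypothetical, conditions and outcome are assumed to be coded as 0 or 1, and the Boolean minimization that a complete analysis involves is left to dedicated QCA software. Each row is one observed configuration of features, with the number of studies showing it and the proportion of those studies judged effective.

```python
from collections import defaultdict


def truth_table(cases, conditions):
    """Build a crisp-set truth table.

    Each row is one observed configuration of conditions, with the number
    of cases showing it and the proportion of those cases coded as
    effective (the consistency of the row with the outcome).
    """
    rows = defaultdict(list)
    for case in cases:
        config = tuple(case[c] for c in conditions)
        rows[config].append(case["effective"])
    return {
        config: {"n": len(outcomes), "consistency": sum(outcomes) / len(outcomes)}
        for config, outcomes in rows.items()
    }


# Hypothetical coded cases, invented purely for illustration.
conditions = ["peer_delivery", "home_visits"]
cases = [
    {"id": "Trial 1", "peer_delivery": 1, "home_visits": 1, "effective": 1},
    {"id": "Trial 2", "peer_delivery": 0, "home_visits": 1, "effective": 0},
    {"id": "Trial 3", "peer_delivery": 1, "home_visits": 0, "effective": 1},
    {"id": "Trial 4", "peer_delivery": 0, "home_visits": 1, "effective": 1},
]
table = truth_table(cases, conditions)
```

In this invented data set the configuration without peer delivery but with home visits has a consistency of 0.5, which mirrors the situation described above in which the same feature appears in both effective and less effective interventions. With so few cases per row, such a table also shows why QCA is unsuitable when limited evidence is available.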

21.14 Reporting the protocol and qualitative evidence synthesis

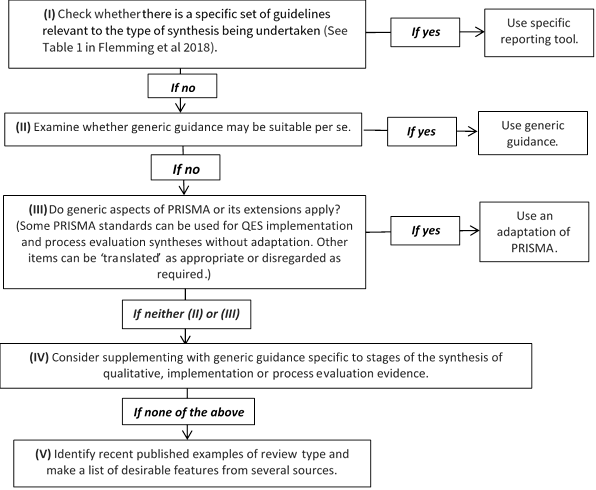

Reporting standards and tools designed for intervention reviews (such as Cochrane’s MECIR standards (http://methods.cochrane.org/mecir) or the PRISMA Statement (Liberati et al 2009)) may not be appropriate for qualitative evidence syntheses or an integrated mixed-method review. Additional guidance on how to choose, adapt or create a hybrid reporting tool is provided as a 5-point ‘decision flowchart’ (Figure 21.14.a) (Flemming et al 2018). Review authors should consider whether: a specific set of reporting guidance is available (e.g. eMERGe for meta-ethnographies (France et al 2015)); generic guidance (e.g. ENTREQ (Tong et al 2012)) is suitable; or additional checklists or tools are appropriate for reporting a specific aspect of the review.

Figure 21.14.a Decision flowchart for choice of reporting approach for syntheses of qualitative, implementation or process evaluation evidence (Flemming et al 2018). Reproduced with permission of Elsevier

21.15 Chapter information

Authors: Jane Noyes, Andrew Booth, Margaret Cargo, Kate Flemming, Angela Harden, Janet Harris, Ruth Garside, Karin Hannes, Tomás Pantoja, James Thomas

Acknowledgements: This chapter replaces Chapter 20 in the first edition of this Handbook (2008) and subsequent Version 5.2. We would like to thank the previous Chapter 20 authors Jennie Popay and Alan Pearson. Elements of this chapter draw on previous supplemental guidance produced by the Cochrane Qualitative and Implementation Methods Group Convenors, to which Simon Lewin contributed.

Funding: JT is supported by the National Institute for Health Research (NIHR) Collaboration for Leadership in Applied Health Research and Care North Thames at Barts Health NHS Trust. The views expressed are those of the author(s) and not necessarily those of the NHS, the NIHR or the Department of Health.

21.16 References

Ames HM, Glenton C, Lewin S. Parents' and informal caregivers' views and experiences of communication about routine childhood vaccination: a synthesis of qualitative evidence. Cochrane Database of Systematic Reviews 2017; 2: CD011787.

Anderson LM, Petticrew M, Rehfuess E, Armstrong R, Ueffing E, Baker P, Francis D, Tugwell P. Using logic models to capture complexity in systematic reviews. Research Synthesis Methods 2011; 2: 33-42.

Barnett-Page E, Thomas J. Methods for the synthesis of qualitative research: a critical review. BMC Medical Research Methodology 2009; 9: 59.

Benoot C, Hannes K, Bilsen J. The use of purposeful sampling in a qualitative evidence synthesis: a worked example on sexual adjustment to a cancer trajectory. BMC Medical Research Methodology 2016; 16: 21.

Bonell C, Jamal F, Harden A, Wells H, Parry W, Fletcher A, Petticrew M, Thomas J, Whitehead M, Campbell R, Murphy S, Moore L. Public Health Research. Systematic review of the effects of schools and school environment interventions on health: evidence mapping and synthesis. Southampton (UK): NIHR Journals Library; 2013.

Booth A, Harris J, Croot E, Springett J, Campbell F, Wilkins E. Towards a methodology for cluster searching to provide conceptual and contextual "richness" for systematic reviews of complex interventions: case study (CLUSTER). BMC Medical Research Methodology 2013; 13: 118.

Booth A, Carroll C. How to build up the actionable knowledge base: the role of 'best fit' framework synthesis for studies of improvement in healthcare. BMJ Quality and Safety 2015; 24: 700-708.

Booth A, Noyes J, Flemming K, Gerhardus A, Wahlster P, van der Wilt GJ, Mozygemba K, Refolo P, Sacchini D, Tummers M, Rehfuess E. Guidance on choosing qualitative evidence synthesis methods for use in health technology assessment for complex interventions 2016. https://www.integrate-hta.eu/wp-content/uploads/2016/02/Guidance-on-choosing-qualitative-evidence-synthesis-methods-for-use-in-HTA-of-complex-interventions.pdf

Booth A. Qualitative evidence synthesis. In: Facey K, editor. Patient involvement in Health Technology Assessment. Singapore: Springer; 2017. p. 187-199.

Booth A, Noyes J, Flemming K, Gerhardus A, Wahlster P, van der Wilt GJ, Mozygemba K, Refolo P, Sacchini D, Tummers M, Rehfuess E. Structured methodology review identified seven (RETREAT) criteria for selecting qualitative evidence synthesis approaches. Journal of Clinical Epidemiology 2018; 99: 41-52.

Booth A, Moore G, Flemming K, Garside R, Rollins N, Tuncalp Ö, Noyes J. Taking account of context in systematic reviews and guidelines considering a complexity perspective. BMJ Global Health 2019a; 4: e000840.

Booth A, Noyes J, Flemming K, Moore G, Tuncalp Ö, Shakibazadeh E. Formulating questions to address the acceptability and feasibility of complex interventions in qualitative evidence synthesis. BMJ Global Health 2019b; 4: e001107.

Candy B, King M, Jones L, Oliver S. Using qualitative synthesis to explore heterogeneity of complex interventions. BMC Medical Research Methodology 2011; 11: 124.

Cargo M, Harris J, Pantoja T, Booth A, Harden A, Hannes K, Thomas J, Flemming K, Garside R, Noyes J. Cochrane Qualitative and Implementation Methods Group guidance series-paper 4: methods for assessing evidence on intervention implementation. Journal of Clinical Epidemiology 2018; 97: 59-69.

Carroll C, Booth A, Cooper K. A worked example of "best fit" framework synthesis: a systematic review of views concerning the taking of some potential chemopreventive agents. BMC Medical Research Methodology 2011; 11: 29.

Carroll C, Booth A, Leaviss J, Rick J. "Best fit" framework synthesis: refining the method. BMC Medical Research Methodology 2013; 13: 37.

Carroll C. Qualitative evidence synthesis to improve implementation of clinical guidelines. BMJ 2017; 356: j80.

CASP. Making sense of evidence: 10 questions to help you make sense of qualitative research: Public Health Resource Unit, England; 2013. http://media.wix.com/ugd/dded87_29c5b002d99342f788c6ac670e49f274.pdf.

Cooke A, Smith D, Booth A. Beyond PICO: the SPIDER tool for qualitative evidence synthesis. Qualitative Health Research 2012; 22: 1435-1443.

Cooper C, Booth A, Britten N, Garside R. A comparison of results of empirical studies of supplementary search techniques and recommendations in review methodology handbooks: a methodological review. Systematic Reviews 2017; 6: 234.

De Buck E, Hannes K, Cargo M, Van Remoortel H, Vande Veegaete A, Mosler HJ, Govender T, Vandekerckhove P, Young T. Engagement of stakeholders in the development of a Theory of Change for handwashing and sanitation behaviour change. International Journal of Environmental Research and Public Health 2018; 28: 8-22.

Dixon-Woods M. Using framework-based synthesis for conducting reviews of qualitative studies. BMC Medicine 2011; 9: 39.

Downe S, Finlayson K, Tuncalp Ö, Metin Gulmezoglu A. What matters to women: a systematic scoping review to identify the processes and outcomes of antenatal care provision that are important to healthy pregnant women. BJOG: An International Journal of Obstetrics and Gynaecology 2016; 123: 529-539.

El Sherif R, Pluye P, Gore G, Granikov V, Hong QN. Performance of a mixed filter to identify relevant studies for mixed studies reviews. Journal of the Medical Library Association 2016; 104: 47-51.

Flemming K, Booth A, Hannes K, Cargo M, Noyes J. Cochrane Qualitative and Implementation Methods Group guidance series-paper 6: reporting guidelines for qualitative, implementation, and process evaluation evidence syntheses. Journal of Clinical Epidemiology 2018; 97: 79-85.

Flemming K, Booth A, Garside R, Tuncalp O, Noyes J. Qualitative evidence synthesis for complex interventions and guideline development: clarification of the purpose, designs and relevant methods. BMJ Global Health 2019; 4: e000882.

France EF, Ring N, Noyes J, Maxwell M, Jepson R, Duncan E, Turley R, Jones D, Uny I. Protocol-developing meta-ethnography reporting guidelines (eMERGe). BMC Medical Research Methodology 2015; 15: 103.

France EF, Cunningham M, Ring N, Uny I, Duncan EAS, Jepson RG, Maxwell M, Roberts RJ, Turley RL, Booth A, Britten N, Flemming K, Gallagher I, Garside R, Hannes K, Lewin S, Noblit G, Pope C, Thomas J, Vanstone M, Higginbottom GMA, Noyes J. Improving reporting of Meta-Ethnography: The eMERGe Reporting Guidance. BMC Medical Research Methodology 2019; 19: 25.

Garside R. Should we appraise the quality of qualitative research reports for systematic reviews, and if so, how? Innovation: The European Journal of Social Science Research 2014; 27: 67-79.

Glenton C, Colvin CJ, Carlsen B, Swartz A, Lewin S, Noyes J, Rashidian A. Barriers and facilitators to the implementation of lay health worker programmes to improve access to maternal and child health: qualitative evidence synthesis. Cochrane Database of Systematic Reviews 2013; 10: CD010414.

Glenton C, Lewin S, Norris S. Chapter 15: Using evidence from qualitative research to develop WHO guidelines. In: Norris S, editor. World Health Organization Handbook for Guideline Development. 2nd. ed. Geneva: WHO; 2016.

Grant MJ, Booth A. A typology of reviews: an analysis of 14 review types and associated methodologies. Health Information and Libraries Journal 2009; 26: 91-108.

Greenhalgh T, Kristjansson E, Robinson V. Realist review to understand the efficacy of school feeding programmes. BMJ 2007; 335: 858.

Harden A, Oakley A, Weston R. A review of the effectiveness and appropriateness of peer-delivered health promotion for young people. London: Institute of Education, University of London; 1999.

Harden A, Thomas J, Cargo M, Harris J, Pantoja T, Flemming K, Booth A, Garside R, Hannes K, Noyes J. Cochrane Qualitative and Implementation Methods Group guidance series-paper 5: methods for integrating qualitative and implementation evidence within intervention effectiveness reviews. Journal of Clinical Epidemiology 2018; 97: 70-78.

Harris JL, Booth A, Cargo M, Hannes K, Harden A, Flemming K, Garside R, Pantoja T, Thomas J, Noyes J. Cochrane Qualitative and Implementation Methods Group guidance series-paper 2: methods for question formulation, searching, and protocol development for qualitative evidence synthesis. Journal of Clinical Epidemiology 2018; 97: 39-48.

Harris KM, Kneale D, Lasserson TJ, McDonald VM, Grigg J, Thomas J. School-based self management interventions for asthma in children and adolescents: a mixed methods systematic review (Protocol). Cochrane Database of Systematic Reviews 2015; 4: CD011651.

Hoffmann TC, Glasziou PP, Boutron I, Milne R, Perera R, Moher D, Altman DG, Barbour V, Macdonald H, Johnston M, Lamb SE, Dixon-Woods M, McCulloch P, Wyatt JC, Chan AW, Michie S. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ 2014; 348: g1687.

Houghton C, Murphy K, Meehan B, Thomas J, Brooker D, Casey D. From screening to synthesis: using nvivo to enhance transparency in qualitative evidence synthesis. Journal of Clinical Nursing 2017; 26: 873-881.

Hurley M, Dickson K, Hallett R, Grant R, Hauari H, Walsh N, Stansfield C, Oliver S. Exercise interventions and patient beliefs for people with hip, knee or hip and knee osteoarthritis: a mixed methods review. Cochrane Database of Systematic Reviews 2018; 4: CD010842.

Kahwati L, Jacobs S, Kane H, Lewis M, Viswanathan M, Golin CE. Using qualitative comparative analysis in a systematic review of a complex intervention. Systematic Reviews 2016; 5: 82.

Kelly MP, Noyes J, Kane RL, Chang C, Uhl S, Robinson KA, Springs S, Butler ME, Guise JM. AHRQ series on complex intervention systematic reviews-paper 2: defining complexity, formulating scope, and questions. Journal of Clinical Epidemiology 2017; 90: 11-18.

Kneale D, Thomas J, Harris K. Developing and Optimising the Use of Logic Models in Systematic Reviews: Exploring Practice and Good Practice in the Use of Programme Theory in Reviews. PloS One 2015; 10: e0142187.

Levac D, Colquhoun H, O'Brien KK. Scoping studies: advancing the methodology. Implementation Science 2010; 5: 69.

Lewin S, Munabi-Babigumira S, Glenton C, Daniels K, Bosch-Capblanch X, van Wyk BE, Odgaard-Jensen J, Johansen M, Aja GN, Zwarenstein M, Scheel IB. Lay health workers in primary and community health care for maternal and child health and the management of infectious diseases. Cochrane Database of Systematic Reviews 2010; 3: CD004015.

Lewin S, Glenton C, Munthe-Kaas H, Carlsen B, Colvin CJ, Gulmezoglu M, Noyes J, Booth A, Garside R, Rashidian A. Using qualitative evidence in decision making for health and social interventions: an approach to assess confidence in findings from qualitative evidence syntheses (GRADE-CERQual). PLoS Medicine 2015; 12: e1001895.

Lewin S, Hendry M, Chandler J, Oxman AD, Michie S, Shepperd S, Reeves BC, Tugwell P, Hannes K, Rehfuess EA, Welch V, McKenzie JE, Burford B, Petkovic J, Anderson LM, Harris J, Noyes J. Assessing the complexity of interventions within systematic reviews: development, content and use of a new tool (iCAT_SR). BMC Medical Research Methodology 2017; 17: 76.

Lewin S, Booth A, Glenton C, Munthe-Kaas H, Rashidian A, Wainwright M, Bohren MA, Tuncalp O, Colvin CJ, Garside R, Carlsen B, Langlois EV, Noyes J. Applying GRADE-CERQual to qualitative evidence synthesis findings: introduction to the series. Implementation Science 2018; 13: 2.

Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gøtzsche PC, Ioannidis JPA, Clarke M, Devereaux PJ, Kleijnen J, Moher D. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ 2009; 339: b2700.

Moore G, Audrey S, Barker M, Bond L, Bonell C, Hardeman W, et al. Process evaluation of complex interventions: Medical Research Council guidance. BMJ 2015; 350: h1258.

Munabi-Babigumira S, Glenton C, Lewin S, Fretheim A, Nabudere H. Factors that influence the provision of intrapartum and postnatal care by skilled birth attendants in low- and middle-income countries: a qualitative evidence synthesis. Cochrane Database of Systematic Reviews 2017; 11: CD011558.

Munthe-Kaas H, Glenton C, Booth A, Noyes J, Lewin S. Systematic mapping of existing tools to appraise methodological strengths and limitations of qualitative research: first stage in the development of the CAMELOT tool. BMC Medical Research Methodology 2019; 19: 113.

National Institute for Health Care Excellence. NICE Process and Methods Guides. Methods for the Development of NICE Public Health Guidance. London: National Institute for Health and Care Excellence (NICE); 2012.

Newton BJ, Rothlingova Z, Gutteridge R, LeMarchand K, Raphael JH. No room for reflexivity? Critical reflections following a systematic review of qualitative research. Journal of Health Psychology 2012; 17: 866-885.

Noblit GW, Hare RD. Meta-ethnography: synthesizing qualitative studies. Newbury Park: Sage Publications, Inc; 1988.

Noyes J, Hendry M, Booth A, Chandler J, Lewin S, Glenton C, Garside R. Current use was established and Cochrane guidance on selection of social theories for systematic reviews of complex interventions was developed. Journal of Clinical Epidemiology 2016a; 75: 78-92.

Noyes J, Hendry M, Lewin S, Glenton C, Chandler J, Rashidian A. Qualitative "trial-sibling" studies and "unrelated" qualitative studies contributed to complex intervention reviews. Journal of Clinical Epidemiology 2016b; 74: 133-143.

Noyes J, Booth A, Flemming K, Garside R, Harden A, Lewin S, Pantoja T, Hannes K, Cargo M, Thomas J. Cochrane Qualitative and Implementation Methods Group guidance series-paper 3: methods for assessing methodological limitations, data extraction and synthesis, and confidence in synthesized qualitative findings. Journal of Clinical Epidemiology 2018a; 97: 49-58.

Noyes J, Booth A, Cargo M, Flemming K, Garside R, Hannes K, Harden A, Harris J, Lewin S, Pantoja T, Thomas J. Cochrane Qualitative and Implementation Methods Group guidance series-paper 1: introduction. Journal of Clinical Epidemiology 2018b; 97: 35-38.

Noyes J, Booth A, Moore G, Flemming K, Tuncalp O, Shakibazadeh E. Synthesising quantitative and qualitative evidence to inform guidelines on complex interventions: clarifying the purposes, designs and outlining some methods. BMJ Global Health 2019; 4 (Suppl 1): e000893.

O'Neill J, Tabish H, Welch V, Petticrew M, Pottie K, Clarke M, Evans T, Pardo Pardo J, Waters E, White H, Tugwell P. Applying an equity lens to interventions: using PROGRESS ensures consideration of socially stratifying factors to illuminate inequities in health. Journal of Clinical Epidemiology 2014; 67: 56-64.

Oliver S, Rees R, Clarke-Jones L, Milne R, Oakley A, Gabbay J, Stein K, Buchanan P, Gyte G. A multidimensional conceptual framework for analysing public involvement in health services research. Health Expectations 2008; 11: 72-84.

Petticrew M, Knai C, Thomas J, Rehfuess E, Noyes J, Gerhardus A, Grimshaw J, Rutter H. Implications of a complexity perspective for systematic reviews and guideline development in health decision making. BMJ Global Health 2019; 4 (Suppl 1): e000899.

Rees R, Oliver K, Woodman J, Thomas J. Children's views about obesity, body size, shape and weight. A systematic review. London: EPPI-Centre, Social Science Research Unit, Institute of Education, University of London; 2009.

Rehfuess EA, Booth A, Brereton L, Burns J, Gerhardus A, Mozygemba K, Oortwijn W, Pfadenhauer LM, Tummers M, van der Wilt GJ, Rohwer A. Towards a taxonomy of logic models in systematic reviews and health technology assessments: A priori, staged, and iterative approaches. Research Synthesis Methods 2018; 9: 13-24.

Robbins SCC, Ward K, Skinner SR. School-based vaccination: a systematic review of process evaluations. Vaccine 2011; 29: 9588-9599.

Rogers M, Bethel A, Abbott R. Locating qualitative studies in dementia on MEDLINE, EMBASE, CINAHL, and PsycINFO: a comparison of search strategies. Research Synthesis Methods 2018; 9: 579-586.

Sandelowski M, Barroso J. Finding the findings in qualitative studies. Journal of Nursing Scholarship 2002; 34: 213-219.

Selva A, Sola I, Zhang Y, Pardo-Hernandez H, Haynes RB, Martinez Garcia L, Navarro T, Schünemann H, Alonso-Coello P. Development and use of a content search strategy for retrieving studies on patients' views and preferences. Health and Quality of Life Outcomes 2017; 15: 126.

Shepherd J, Kavanagh J, Picot J, Cooper K, Harden A, Barnett-Page E, Jones J, Clegg A, Hartwell D, Frampton GK, Price A. The effectiveness and cost-effectiveness of behavioural interventions for the prevention of sexually transmitted infections in young people aged 13-19: a systematic review and economic evaluation. Health Technology Assessment 2010; 14: 1-206, iii-iv.

Squires JE, Valentine JC, Grimshaw JM. Systematic reviews of complex interventions: framing the review question. Journal of Clinical Epidemiology 2013; 66: 1215-1222.

Stansfield C, Kavanagh J, Rees R, Gomersall A, Thomas J. The selection of search sources influences the findings of a systematic review of people's views: a case study in public health. BMC Medical Research Methodology 2012; 12: 55.

SURE (Supporting the Use of Research Evidence) Collaboration. SURE Guides for Preparing and Using Evidence-based Policy Briefs: 5 Identifying and Addressing Barriers to Implementing the Policy Options. Version 2.1, updated November 2011. https://epoc.cochrane.org/sites/epoc.cochrane.org/files/public/uploads/SURE-Guides-v2.1/Collectedfiles/sure_guides.html

Suri H. Purposeful sampling in qualitative research synthesis. Qualitative Research Journal 2011; 11: 63-75.

Thomas J, Harden A, Oakley A, Oliver S, Sutcliffe K, Rees R, Brunton G, Kavanagh J. Integrating qualitative research with trials in systematic reviews. BMJ 2004; 328: 1010-1012.

Thomas J, Harden A. Methods for the thematic synthesis of qualitative research in systematic reviews. BMC Medical Research Methodology 2008; 8: 45.

Thomas J, Brunton J, Graziosi S. EPPI-Reviewer 4.0: software for research synthesis [Software]. EPPI-Centre Software. Social Science Research Unit, Institute of Education, University of London UK; 2010. https://eppi.ioe.ac.uk/CMS/Default.aspx?alias=eppi.ioe.ac.uk/cms/er4&.

Thomas J, O'Mara-Eves A, Brunton G. Using qualitative comparative analysis (QCA) in systematic reviews of complex interventions: a worked example. Systematic Reviews 2014; 3: 67.

Tong A, Flemming K, McInnes E, Oliver S, Craig J. Enhancing transparency in reporting the synthesis of qualitative research: ENTREQ. BMC Medical Research Methodology 2012; 12: 181.

van Grootel L, van Wesel F, O'Mara-Eves A, Thomas J, Hox J, Boeije H. Using the realist perspective to link theory from qualitative evidence synthesis to quantitative studies: broadening the matrix approach. Research Synthesis Methods 2017; 8: 303-311.

Verhage A, Boels D. Critical appraisal of mixed methods research studies in a systematic scoping review on plural policing: assessing the impact of excluding inadequately reported studies by means of a sensitivity analysis. Quality & Quantity 2017; 51: 1449-1468.

Walker LO, Avant KC. Strategies for theory construction in nursing. Upper Saddle River (NJ): Pearson Prentice Hall; 2005.